Thursday January 9th, 2020

IAM Roles for EKS Service Account

Allowing an application running in a pod to have its own associated IAM role was one of the most requested EKS features, and AWS has finally made it available: EKS now natively supports IAM permissions for Kubernetes service accounts.

Before diving into how to set up a Kubernetes pod that uses an AWS IAM role to make AWS API calls, let us briefly go over the components required to make all this work.

Amazon EKS stands for Elastic Kubernetes Service, a fully managed Kubernetes service. There are different ways to bootstrap a Kubernetes cluster using Amazon EKS:

- EKS on Fargate: the compute is fully managed by AWS, so there are no Kubernetes EC2 worker nodes to manage.

- Managed Node Groups (Kubernetes data plane): worker nodes are spun up via an Auto Scaling group and, as with the control plane, AWS takes care of things ranging from security patching and Kubernetes version updates to monitoring and alerting.

- Manual bootstrapping of the worker nodes: you are responsible for those worker nodes (except for the infrastructure that runs the EC2 instances, according to the AWS shared responsibility model).

This sums up how one can bootstrap a Kubernetes cluster in AWS using Amazon EKS. For more on Amazon EKS, please visit the links below:

- Amazon EKS Service: https://aws.amazon.com/eks/

- Managed Node Groups: https://aws.amazon.com/blogs/containers/eks-managed-node-groups/

- EKS Fargate: https://aws.amazon.com/blogs/aws/amazon-eks-on-aws-fargate-now-generally-available/ (this can also be an interesting read on AWS Fargate/serverless: https://aws.amazon.com/blogs/opensource/firecracker-open-source-secure-fast-microvm-serverless/)

AWS IAM stands for Identity and Access Management, which in a nutshell allows secure access to AWS services and resources. In this post we are interested in identity federation, because IAM's support for external identity providers (IdPs) was a game changer. Organisations often already use third-party tools to manage their identities (users, for instance), which can be an important blocker for their transition to AWS; hence the support for external identity providers. At the time of writing, AWS IAM supports two types of IdP: OpenID Connect (OIDC) and Security Assertion Markup Language (SAML). This post focuses on OIDC, since IAM role support for Amazon EKS relies on it. OIDC is the successor to OpenID 2.0: an identity layer built on top of OAuth 2.0, with an improved API and more identity management features, that uses JSON Web Tokens (JWT). How this works with Amazon EKS is explained later in this post. For more detail, please refer to the AWS IAM documentation on identity providers and federation.

Prior to Amazon EKS IAM support

Prior to this feature release, different approaches were used to give EKS pods access to the AWS API. Let us go through some of them.

Extending the IAM role policies of the Amazon EKS worker nodes has always been the easiest way to allow an EKS pod to make AWS API calls. The problem with this approach is that there is no isolation at the container level: any container running on the worker node inherits the same set of IAM permissions and therefore gains access to the same AWS services. From a security standpoint, this violates the principle of least privilege.

To tackle this issue, third-party tools such as kube2iam and kiam have been adopted to reach container-level isolation. kube2iam, for example, proxies all requests to the EC2 metadata API to a container running on every worker node (the kube2iam DaemonSet), which in turn retrieves temporary credentials and returns them to the caller. For more on kube2iam and kiam, please visit:

- kube2iam: https://github.com/jtblin/kube2iam

- kiam: https://github.com/uswitch/kiam

With this new Amazon EKS feature, there is no need to extend the worker node IAM role policies: container-level isolation can be reached without a third-party tool. More importantly, AWS API access obtained via a Kubernetes service account is audited by CloudTrail, meaning CloudTrail logs the service account that uses the IAM role.
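To make the isolation concrete: the IAM role behind a service account can only be assumed through the cluster's OIDC provider, and its trust policy is typically scoped to a single service account. The Python sketch below builds a trust policy of roughly that shape. The function name, the account ID 111122223333 and the issuer ID EXAMPLE are placeholders of mine, and the exact document that a tool such as eksctl generates may differ.

```python
import json


def build_trust_policy(account_id, oidc_issuer_url, namespace, service_account):
    """Return an IAM trust policy scoped to one Kubernetes service account."""
    # IAM identifies the OIDC provider by the issuer URL without its scheme.
    issuer = oidc_issuer_url.split("://", 1)[-1]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{issuer}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # Only tokens issued for STS ...
                        f"{issuer}:aud": "sts.amazonaws.com",
                        # ... and for this exact service account are accepted.
                        f"{issuer}:sub": (
                            f"system:serviceaccount:{namespace}:{service_account}"
                        ),
                    }
                },
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder values, not taken from a real account or cluster.
    policy = build_trust_policy(
        "111122223333",
        "https://oidc.eks.eu-north-1.amazonaws.com/id/EXAMPLE",
        "default",
        "eks-iam-test",
    )
    print(json.dumps(policy, indent=2))
```

A pod running under any other service account presents a token with a different sub claim, so the condition fails and STS refuses to hand out credentials for this role.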

Now we have at least a glimpse of why this feature was so eagerly awaited by Amazon EKS users.

How does it work

I will only cover the big picture of how the different dots are connected. For more depth, please review the AWS documentation: https://docs.aws.amazon.com/eks/latest/userguide/iam-roles-for-service-accounts.html

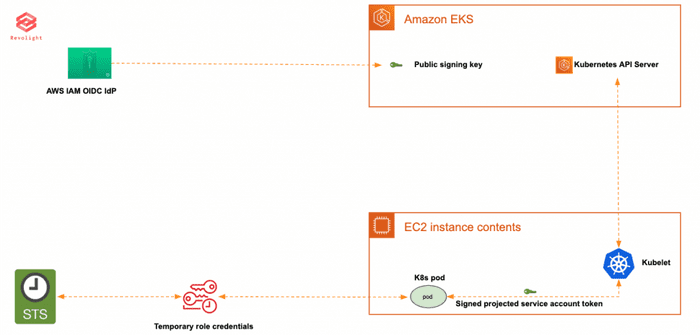

Let's go through the flow of the signed projected service account token.

Support for projected service account tokens was added in Kubernetes 1.12. A projected service account token is an OIDC JSON Web Token (JWT) containing the service account identity, the duration of the token and the intended audience. In simpler terms, it is a token that can be validated by a third-party credentials provider, in this case AWS IAM. A pod receives a cryptographically signed OIDC token, which STS validates against the OIDC IdP (as a small note, AWS updated its SDKs with a new credentials provider that calls sts:AssumeRoleWithWebIdentity). In EKS, an admission controller injects into the pod the ARN of the IAM role annotated on the service account, together with the path of the projected token file. They are exposed through the environment variables AWS_ROLE_ARN and AWS_WEB_IDENTITY_TOKEN_FILE, which I will demonstrate in the demo below.
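As a rough illustration of what an SDK credentials provider does with those two variables, the sketch below reads them, loads the token and builds the unsigned sts:AssumeRoleWithWebIdentity query. It is not the SDK's actual code: the function name and the fallback session name are made up for this example, and a real SDK also sends the request, parses the temporary credentials and refreshes them before they expire.

```python
import os
import urllib.parse


def build_sts_request(environ=os.environ):
    """Build the AssumeRoleWithWebIdentity query URL from the injected variables."""
    role_arn = environ["AWS_ROLE_ARN"]
    token_file = environ["AWS_WEB_IDENTITY_TOKEN_FILE"]
    # Read the token on every call: the projected token is short-lived
    # and the file content is replaced when it is rotated.
    with open(token_file, encoding="utf-8") as handle:
        token = handle.read().strip()
    params = {
        "Action": "AssumeRoleWithWebIdentity",
        "Version": "2011-06-15",
        "RoleArn": role_arn,
        # Hypothetical default; SDKs pick their own session name.
        "RoleSessionName": environ.get("AWS_ROLE_SESSION_NAME", "irsa-demo-session"),
        "WebIdentityToken": token,
    }
    # The call is not signed with AWS credentials: the token itself is the proof.
    return "https://sts.amazonaws.com/?" + urllib.parse.urlencode(params)
```

The point of the design is that no long-lived AWS secret ever lives in the pod: the only input is the signed token, and STS returns temporary credentials for the role named in AWS_ROLE_ARN.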

A brief contrast between the service account token and the projected service account token: service accounts are Kubernetes' own internal identity system, allowing pods and clients to communicate with the Kubernetes API server via a service account token that could only be validated by the Kubernetes API server. As explained above, Kubernetes 1.12 added the projected service account token, which is an OIDC JSON Web Token and can be validated by a third-party credentials provider such as AWS STS.
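Because the projected token is a plain JWT, its claims can be inspected with a few lines of Python. The helper below is only a sketch for looking inside a token; it does not verify the signature, which is the part STS performs against the OIDC provider's public keys. The function name is my own.

```python
import base64
import json


def decode_jwt_claims(token):
    """Return the claims of a JWT as a dict, WITHOUT verifying its signature."""
    # A JWT is three base64url segments: header.payload.signature
    payload = token.split(".")[1]
    # The segments are encoded without padding; restore it before decoding.
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))
```

Inside a pod, the token can be read from the path stored in AWS_WEB_IDENTITY_TOKEN_FILE. The claims of interest are typically iss (the cluster OIDC issuer), aud (the intended audience), sub (of the form system:serviceaccount:NAMESPACE:NAME) and exp (the expiry time).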

Demo: hands on using eksctl

Minor note, please replace REDACTED with the right value for your use case.

eksctl is a CLI tool written in Go that uses CloudFormation to create and set up Amazon EKS clusters. Make sure eksctl is installed; for more information on how to install it, please visit: https://github.com/weaveworks/eksctl

In this hands-on demo, I will create the following:

- Kubernetes cluster with one worker node

- IAM OIDC provider

- Kubernetes service account annotated with an IAM role

- And finally a pod to test access to some AWS services

Create a Kubernetes cluster

If you already have a running cluster, you can skip this step. By default, eksctl will create a dedicated VPC to avoid clashing with any existing resources. You can also create a YAML configuration file for eksctl to specify how you would like the cluster to be created, as in the example below.

    ---
    apiVersion: eksctl.io/v1alpha5
    kind: ClusterConfig
    metadata:
      name: eks-iam
      region: eu-north-1
    vpc:
      id: "VPC-ID"
      cidr: "VPC-CIDR"
      subnets:
        private:
          eu-north-1a:
            id: "private-subnet-ID-in-eu-north-1a"
            cidr: "SUBNET-CIDR-in-eu-north-1a"
          eu-north-1b:
            id: "private-subnet-ID-in-eu-north-1b"
            cidr: "SUBNET-CIDR-in-eu-north-1b"
          eu-north-1c:
            id: "private-subnet-ID-in-eu-north-1c"
            cidr: "SUBNET-CIDR-in-eu-north-1c"
    managedNodeGroups:
      - name: managed-1
        instanceType: t3.small
        minSize: 1
        desiredCapacity: 1
        maxSize: 1
        availabilityZones: ["eu-north-1a", "eu-north-1b", "eu-north-1c"]
        volumeSize: 20
        ssh:
          allow: true
          publicKey: "ssh-rsa ********"
          sourceSecurityGroupIds: ["sg-***********"]
        labels: {role: worker}
        tags:
          nodegroup-role: worker
        iam:
          withAddonPolicies:
            externalDNS: true
            certManager: true
    cloudWatch:
      clusterLogging:
        enableTypes: ["audit", "authenticator", "controllerManager"]

For more on how to customize eksctl, please visit: https://github.com/weaveworks/eksctl/tree/master/examples

But for this demo, I will be using the default setup for eksctl.

    $ eksctl create cluster eks-iam --region eu-north-1
    [ℹ] eksctl version 0.11.1
    [ℹ] using region eu-north-1

Now verify that the cluster has been properly created.

    # List EKS clusters
    $ aws eks list-clusters --region eu-north-1
    {
        "clusters": [
            "eks-iam"
        ]
    }

Create IAM OIDC provider for EKS

    $ eksctl utils associate-iam-oidc-provider --name eks-iam --approve
    [ℹ] eksctl version 0.11.1
    [ℹ] using region eu-north-1
    [ℹ] will create IAM Open ID Connect provider for cluster "eks-iam" in "eu-north-1"
    [✔] created IAM Open ID Connect provider for cluster "eks-iam" in "eu-north-1"

Describe the EKS cluster and query the cluster OIDC issuer

    $ aws eks describe-cluster --name eks-iam --query cluster.identity.oidc.issuer --region eu-north-1
    "https://oidc.eks.eu-north-1.amazonaws.com/id/******REDACTED*****"

Create Kubernetes service account with an IAM policy attached

    $ eksctl create iamserviceaccount --name eks-iam-test --namespace default --cluster eks-iam --attach-policy-arn arn:aws:iam::aws:policy/ReadOnlyAccess --region eu-north-1 --approve --override-existing-serviceaccounts
    [ℹ] eksctl version 0.11.1
    [ℹ] using region eu-north-1
    [ℹ] 1 iamserviceaccount (default/eks-iam-test) was included (based on the include/exclude rules)
    [ℹ] 1 task: { 2 sequential sub-tasks: { create IAM role for serviceaccount "default/eks-iam-test", create serviceaccount "default/eks-iam-test" } }
    [ℹ] building iamserviceaccount stack "eksctl-eks-iam-addon-iamserviceaccount-default-eks-iam-test"
    [ℹ] deploying stack "eksctl-eks-iam-addon-iamserviceaccount-default-eks-iam-test"
    [ℹ] created serviceaccount "default/eks-iam-test"

Now verify that the service account has been created: list the service accounts and describe the eks-iam-test service account.

    $ kubectl get sa -n default
    NAME           SECRETS   AGE
    default        1         24m
    eks-iam-test   1         2m59s
    $ kubectl describe sa eks-iam-test -n default
    Name:                eks-iam-test
    Namespace:           default
    Labels:              <none>
    Annotations:         eks.amazonaws.com/role-arn: arn:aws:iam::REDACTED:role/eksctl-eks-iam-addon-iamserviceaccount-defau-Role1-1PKHL6R9PBETU
    Image pull secrets:  <none>
    Mountable secrets:   eks-iam-test-token-c8b7t
    Tokens:              eks-iam-test-token-c8b7t
    Events:              <none>

Deploy a Kubernetes deployment

Now we will deploy a sample pod to verify that we can make AWS API calls from inside the pod's container.

Create a deployment manifest file, for example eks-iam-test.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: eks-iam-test
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: eks-iam-test
      template:
        metadata:
          labels:
            app: eks-iam-test
        spec:
          serviceAccountName: eks-iam-test
          containers:
            - name: eks-iam-test
              image: sdscello/awscli:latest
              ports:
                - containerPort: 80

Deploy the eks-iam-test.yaml deployment manifest file and verify that the pod is running.

    # Create the eks-iam-test deployment
    $ kubectl apply -f eks-iam-test.yaml -n default
    # List pods to make sure the pod is running
    $ kubectl get pods -n default
    NAME                            READY   STATUS    RESTARTS   AGE
    eks-iam-test-69d4fbdbff-tk7ph   1/1     Running   0          54s
    # Describe the pod; you should notice the two environment variables
    # AWS_ROLE_ARN and AWS_WEB_IDENTITY_TOKEN_FILE as well as the mounted volumes.
    $ kubectl describe pod eks-iam-test-69d4fbdbff-tk7ph -n default

Test running the AWS CLI inside the container to list the S3 buckets, which should work as expected.

    $ kubectl exec -it eks-iam-test-69d4fbdbff-tk7ph -n default -- aws s3 ls
    2019-09-16 11:08:18 REDACTED-***********************
    2017-11-01 09:37:38 REDACTED-***********************
    2018-06-27 09:06:16 thesis.kaliloudiaby.com

Now test a bucket creation, which should be denied since the service account is associated with the IAM ReadOnlyAccess policy.

    $ kubectl exec -it eks-iam-test-69d4fbdbff-tk7ph -n default -- bash
    root@eks-iam-test-69d4fbdbff-tk7ph:/# aws s3api create-bucket --bucket eks-iam-test-revolight
    An error occurred (AccessDenied) when calling the CreateBucket operation: Access Denied

Now let us explore some of the automatically injected environment variables, namely AWS_ROLE_ARN and AWS_WEB_IDENTITY_TOKEN_FILE.

    $ kubectl exec -it eks-iam-test-69d4fbdbff-tk7ph -n default -- bash -c export
    declare -x AWS_ROLE_ARN="arn:aws:iam::REDACTED:role/eksctl-eks-iam-addon-iamserviceaccount-defau-Role1-1PKHL6R9PBETU"
    declare -x AWS_WEB_IDENTITY_TOKEN_FILE="/var/run/secrets/eks.amazonaws.com/serviceaccount/token"
    declare -x DEBIAN_FRONTEND="noninteractive"
    declare -x HOME="/root"
    declare -x HOSTNAME="eks-iam-test-69d4fbdbff-tk7ph"
    .......

Cleanup

    $ eksctl delete cluster --name eks-iam --region eu-north-1

Hands on using Terraform

Please refer to the post "Terraform: Hands on Amazon EKS IAM Role support".