Friday August 12th, 2016

CouchDB Database replication

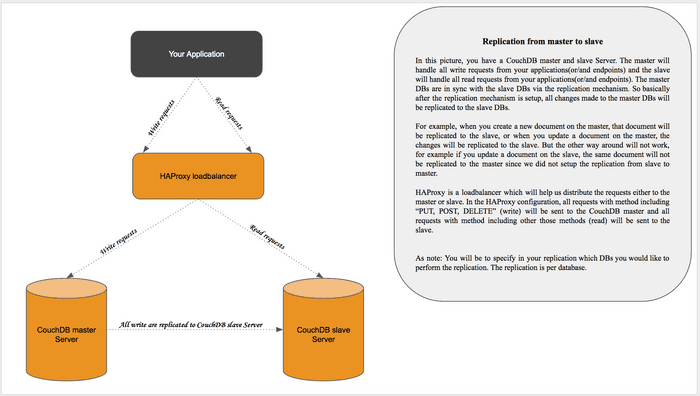

In this tutorial, we will set up CouchDB replication. We will use one CouchDB write server and one CouchDB read server: all read requests will be handled by the read server and all write requests will be handled by the write server. An HAProxy load balancer sits in front of those two servers.

CouchDB is a NoSQL document database written in Erlang. For more in-depth documentation, please visit the Apache CouchDB documentation: http://couchdb.apache.org/

Now let's create the Vagrantfile for spinning up 3 virtual machines: two CouchDB servers (read and write) and an HAProxy load balancer

# -*- mode: ruby -*-

# vi: set ft=ruby :

VAGRANTFILE_API_VERSION = "2"

Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|

# This will create the VM for couchdb writes

config.vm.define :couchdb_master do |master|

master.vm.box = "centos/7"

master.vm.hostname = "couchdb-master"

master.vm.network "private_network", ip: "192.168.50.200"

master.vm.synced_folder ".", "/home/vagrant/sync", disabled: true

master.ssh.forward_agent = true

master.ssh.insert_key = false

master.ssh.private_key_path = ["~/.vagrant.d/insecure_private_key"]

end

# This will create the VM for couchdb reads

config.vm.define :couchdb_reads do |reads|

reads.vm.box = "centos/7"

reads.vm.hostname = "couchdb-reads"

reads.vm.network "private_network", ip: "192.168.50.201"

reads.vm.synced_folder ".", "/home/vagrant/sync", disabled: true

reads.ssh.forward_agent = true

reads.ssh.insert_key = false

reads.ssh.private_key_path = ["~/.vagrant.d/insecure_private_key" ]

end

# This will create the VM for the HAProxy loadbalancer

config.vm.define :haproxy_server do |haproxy|

haproxy.vm.box = "centos/7"

haproxy.vm.hostname = "haproxy-server"

haproxy.vm.network "private_network", ip: "192.168.50.202"

haproxy.vm.synced_folder ".", "/home/vagrant/sync", disabled: true

haproxy.ssh.forward_agent = true

haproxy.ssh.insert_key = false

haproxy.ssh.private_key_path = ["~/.vagrant.d/insecure_private_key" ]

end

end

Now run the following command to create all three virtual machines:

$ vagrant up

If you are interested, there is a live demo video on how to create the virtual machines using Vagrant in conjunction with the Vagrantfile. Note that this video does not cover the entire tutorial; it is only about creating the virtual machines using Vagrant and the Vagrantfile.

You may use fullscreen mode so that the screen is clearer.

Now that the virtual machines are created, let's install and configure the CouchDB write and read servers.

Couchdb write server

Run the following commands:

SSH into the virtual machine (couchdb_master)

$ vagrant ssh couchdb_master

Update the RPM packages to the latest version

$ sudo yum -y update

Then install the Development Tools group, which provides packages required to compile the CouchDB source, e.g. the C/C++ compiler and so on.

$ sudo yum -y groupinstall "Development Tools"

We will now install some dependencies required to run CouchDB

$ sudo yum -y install libicu-devel curl-devel ncurses-devel libtool unixODBC unixODBC-devel wget

$ sudo yum -y install libxslt fop java-1.8.0-openjdk java-1.8.0-openjdk-devel openssl-devel vim

Now let's install SpiderMonkey, Mozilla's JavaScript engine written in C/C++

$ sudo yum install -y js-devel

Install Erlang (required since CouchDB is written in Erlang)

$ sudo yum install -y epel-release

$ sudo yum install -y erlang

Download the CouchDB source

$ wget http://www-eu.apache.org/dist/couchdb/source/1.6.1/apache-couchdb-1.6.1.tar.gz

Extract, configure, build and install the CouchDB source

$ tar xvfz apache-couchdb-1.6.1.tar.gz

$ cd apache-couchdb-1.6.1

$ ./configure --with-erlang=/usr/lib64/erlang/usr/include/

$ make

$ sudo make install

Now create the couchdb user so that the CouchDB-related directories can be given the correct ownership

$ sudo adduser --no-create-home couchdb

$ sudo chown -R couchdb:couchdb /usr/local/var/lib/couchdb

$ sudo chown -R couchdb:couchdb /usr/local/var/log/couchdb

$ sudo chown -R couchdb:couchdb /usr/local/var/run/couchdb

$ sudo chown -R couchdb:couchdb /usr/local/etc/couchdb

Now create a link to the startup script, which will allow us to start, stop and restart CouchDB as well as check the service status

$ sudo ln -sf /usr/local/etc/rc.d/couchdb /etc/init.d/couchdb

Now change bind_address to 0.0.0.0 and port to 5984 inside local.ini (you can use your favorite editor instead of vim)

$ sudo vim /usr/local/etc/couchdb/local.ini

[httpd]

port = 5984

bind_address = 0.0.0.0

Start CouchDB

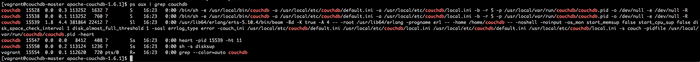

$ sudo /etc/init.d/couchdb start

Check to make sure the couchdb process is running

$ ps aux | grep couchdb

Now make sure the CouchDB Futon interface is working by visiting http://192.168.50.200:5984/_utils/
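The same check can also be done from the command line. The following is a minimal sketch, assuming curl is available on the machine you run it from. The function names looks_like_couchdb and couchdb_is_up are hypothetical helpers, not part of CouchDB. The sketch relies on CouchDB answering a GET on its root URL with a small JSON document that contains the key "couchdb".

```shell
# Hypothetical helper: reads an HTTP response body on stdin and succeeds
# if it contains the "couchdb" key of the CouchDB welcome document.
looks_like_couchdb() {
  grep -q '"couchdb"'
}

# Hypothetical helper: query the root URL of a CouchDB server and check the reply.
# $1 is the base URL, e.g. http://192.168.50.200:5984
couchdb_is_up() {
  curl -s --max-time 5 "$1/" | looks_like_couchdb
}

# Usage (run after CouchDB has been started):
#   couchdb_is_up http://192.168.50.200:5984 && echo "CouchDB is up"
```

Unlike the ps check, this confirms that the server actually answers HTTP requests on the bound address and port.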

Video on how to set up the CouchDB server. You may use fullscreen mode so that the screen is clearer.

CouchDB Reads Server

SSH into the couchdb_reads server

$ vagrant ssh couchdb_reads

Repeat the same steps as for the CouchDB write server above

Or

If you install CouchDB often, for example for development purposes, you can use Vagrant to package a box from the couchdb_master VM. Vagrant will create a new box which already has CouchDB installed and configured. You then replace the box name in the Vagrantfile.

How to do it

$ vagrant halt couchdb_master

$ vagrant package couchdb_master --output couchdb.box

$ vagrant box add 'couchdb-server' file://path_to_couchdb_box

Now change the box name in the Vagrantfile

# -*- mode: ruby -*-

# vi: set ft=ruby :

VAGRANTFILE_API_VERSION = "2"

Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|

# This will create the VM for couchdb writes

config.vm.define :couchdb_master do |master|

# master.vm.box = "centos/7"

master.vm.box = "couchdb-server"

master.vm.hostname = "couchdb-master"

master.vm.network "private_network", ip: "192.168.50.200"

master.vm.synced_folder ".", "/home/vagrant/sync", disabled: true

master.ssh.forward_agent = true

master.ssh.insert_key = false

master.ssh.private_key_path = ["~/.vagrant.d/insecure_private_key"]

end

# This will create the VM for couchdb reads

config.vm.define :couchdb_reads do |reads|

# reads.vm.box = "centos/7"

reads.vm.box = "couchdb-server"

reads.vm.hostname = "couchdb-reads"

reads.vm.network "private_network", ip: "192.168.50.201"

reads.vm.synced_folder ".", "/home/vagrant/sync", disabled: true

reads.ssh.forward_agent = true

reads.ssh.insert_key = false

reads.ssh.private_key_path = ["~/.vagrant.d/insecure_private_key" ]

#reads.vm.provision "shell", path: "couchdb_bootstrap.sh"

end

# This will create the VM for the HAProxy loadbalancer

config.vm.define :haproxy_server do |haproxy|

haproxy.vm.box = "centos/7"

haproxy.vm.hostname = "haproxy-server"

haproxy.vm.network "private_network", ip: "192.168.50.202"

haproxy.vm.synced_folder ".", "/home/vagrant/sync", disabled: true

haproxy.ssh.forward_agent = true

haproxy.ssh.insert_key = false

haproxy.ssh.private_key_path = ["~/.vagrant.d/insecure_private_key" ]

end

end

Now running $ vagrant up couchdb_master or $ vagrant up couchdb_reads will use the box named "couchdb-server", in which CouchDB is already installed and configured. This lets you create many CouchDB servers in a short amount of time.

HAProxy Server

HAProxy stands for High Availability Proxy; it is a popular open source TCP and HTTP load balancer. You can use HAProxy to distribute the workload across many servers. I am not going to cover all aspects of HAProxy; for more in-depth documentation please visit http://www.haproxy.org/#docs

Let's start by installing HAProxy on the haproxy_server VM

$ sudo yum -y install haproxy

Now modify the haproxy.cfg configuration file

$ sudo vim /etc/haproxy/haproxy.cfg

#---------------------------------------------------------------------

# Example configuration for a possible web application. See the

# full configuration options online.

#

# http://haproxy.1wt.eu/download/1.4/doc/configuration.txt

#

#---------------------------------------------------------------------

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

#---------------------------------------------------------------------

# main frontend which proxys to the backends

#---------------------------------------------------------------------

frontend couchdb_loadbalancer *:5984

acl url_couchdb path_beg /

acl master_methods method POST DELETE PUT

use_backend couchdb_master_backend if master_methods url_couchdb

use_backend couchdb_read_backend if !master_methods url_couchdb

#---------------------------------------------------------------------

# Couchdb master backend

#---------------------------------------------------------------------

backend couchdb_master_backend

option httpchk GET / HTTP/1.0

http-check expect rstring couchdb

server couchdb-master 192.168.50.200:5984 weight 1 maxconn 512 check

#---------------------------------------------------------------------

# Couchdb read server

#---------------------------------------------------------------------

backend couchdb_read_backend

option httpchk GET / HTTP/1.0

http-check expect rstring couchdb

server couchdb-read 192.168.50.201:5984 weight 1 maxconn 512 check

listen stats :9000

mode http

stats enable

stats hide-version

stats realm Haproxy\ Statistics

stats uri /

stats auth haproxy:haproxy

Let's start HAProxy

$ sudo systemctl enable haproxy

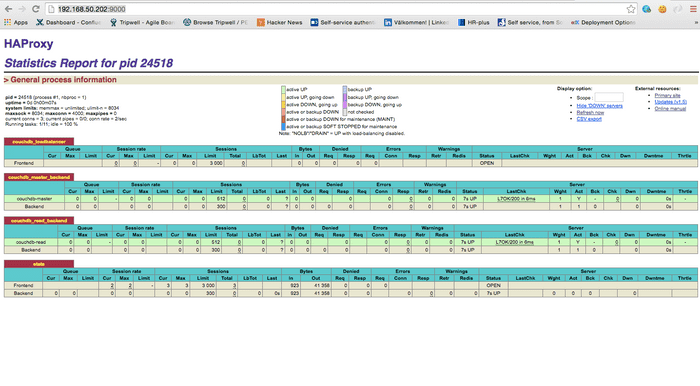

$ sudo systemctl start haproxy

And now visit the web interface at http://192.168.50.202:9000/

As you can see in the screenshot above, the couchdb-master and couchdb-read servers are up and running. This is the HAProxy stats web interface. HAProxy is responsible for directing write requests to couchdb-master and read requests to couchdb-read. Moreover, HAProxy stats shows the status of each server: the green color indicates that both the CouchDB read and write servers are up and running; otherwise the color will be red.
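To make the routing rule concrete, here is a small shell sketch that mirrors the two use_backend lines of the frontend in haproxy.cfg: POST, DELETE and PUT requests go to couchdb_master_backend, and everything else goes to couchdb_read_backend. The function pick_backend is purely illustrative and is not part of HAProxy; HAProxy evaluates the ACLs itself.

```shell
# Illustrative only: mimic the frontend ACLs from haproxy.cfg.
# $1 is the HTTP method of the incoming request.
pick_backend() {
  case "$1" in
    POST|DELETE|PUT) echo "couchdb_master_backend" ;;  # matches acl master_methods
    *)               echo "couchdb_read_backend" ;;    # !master_methods
  esac
}

pick_backend PUT   # prints couchdb_master_backend
pick_backend GET   # prints couchdb_read_backend
```

One consequence of routing on these three methods only: any other method, including CouchDB's COPY, would be sent to the read backend, so you may want to extend master_methods if your application uses it.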

Setup Replication

The goal of this replication mechanism is to synchronise the couchdb-master databases to the couchdb-reads databases. Replication only copies the latest revision of each CouchDB document, so all changes on the master will be replicated to the read server.

Create two databases, cars and books, on the master server

SSH into the couchdb_master server

$ vagrant ssh couchdb_master

Create the two databases

$ curl -X PUT http://127.0.0.1:5984/cars

$ curl -X PUT http://127.0.0.1:5984/books

Make sure the databases have been created by running the following command:

$ curl -X GET http://localhost:5984/_all_dbs

["_replicator","_users","books","cars"]

Now, on the couchdb_reads server, we will trigger the replication

SSH into the couchdb_reads server

$ vagrant ssh couchdb_reads

When you fetch the list of databases, you should only see "_replicator" and "_users"

$ curl -X GET http://localhost:5984/_all_dbs

["_replicator","_users"]

Now let's set up the replication for the cars database, which means the CouchDB master will replicate the cars database to the CouchDB reads server. After the replication, the cars DB on the master and the cars DB on the reads server will be in sync.

Create replication for cars DB

$ curl -H 'Content-Type: application/json' -X POST http://localhost:5984/_replicate -d '{"source": "http://192.168.50.200:5984/cars", "target": "cars", "create_target": true, "continuous": true}'

Now fetch all DBs and you should see the cars DB in the list

$ curl -X GET http://localhost:5984/_all_dbs

["_replicator","_users","cars"]

Create replication for books DB

$ curl -H 'Content-Type: application/json' -X POST http://localhost:5984/_replicate -d '{"source": "http://192.168.50.200:5984/books", "target": "books", "create_target": true, "continuous": true}'

Now fetch all DBs and you should see the books DB in the list

$ curl -X GET http://localhost:5984/_all_dbs

["_replicator","_users","books","cars"]

Watch the video on how to set up the replication
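One way to confirm that replication works is to write a document on the master and read it back from the reads server. The sketch below assumes the cars replication above is running and that no admin user has been created yet. The document ID test-car, its fields and the function names are made up for illustration.

```shell
MASTER=http://192.168.50.200:5984
READS=http://192.168.50.201:5984

# Build the URL of a document: $1 = server base URL, $2 = database, $3 = document ID.
doc_url() {
  echo "$1/$2/$3"
}

# Write a test document to the master, wait briefly, then fetch it from the reads server.
# $1 is the database name.
check_replication() {
  curl -s -X PUT "$(doc_url "$MASTER" "$1" test-car)" -d '{"make": "example"}'
  sleep 2   # continuous replication is asynchronous, so allow it a moment
  curl -s -X GET "$(doc_url "$READS" "$1" test-car)"
}

# Usage:
#   check_replication cars
```

If the second request returns the document, the change has reached the reads server. Running the check a second time will report a conflict on the PUT, because the document already exists on the master.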

Extra: How to automate the replication to be triggered

It is nice to have a mechanism to check that your replications are still running. From experience, replication can sometimes stop for various reasons. The goal is to set up a cron job that checks the replication of the cars and books databases; if the replication is not running, the cron job will trigger it, which in turn will synchronise your DBs again.
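CouchDB lists running replications in its /_active_tasks endpoint, so a check like the one described above can also be sketched in plain shell. This is an alternative illustration, not the approach used in the rest of this section. The function names are hypothetical, the parsing is a simple grep rather than a real JSON parser, and the adminuser:mypass credentials are assumed to be those of a server admin, which /_active_tasks requires once an admin user exists.

```shell
# Hypothetical helper: reads the /_active_tasks JSON on stdin and succeeds
# if a task of type "replication" has the database named in $1 as its target.
replication_running() {
  tr '}' '\n' | grep '"type": *"replication"' | grep -q "\"target\": *\"$1\""
}

# Hypothetical helper: (re)start a continuous pull replication for database $1.
start_replication() {
  curl -s -H 'Content-Type: application/json' \
    -X POST http://adminuser:mypass@localhost:5984/_replicate \
    -d "{\"source\": \"http://192.168.50.200:5984/$1\", \"target\": \"$1\", \"continuous\": true}"
}

# Usage on the reads server (assumed credentials and hosts):
#   curl -s http://adminuser:mypass@localhost:5984/_active_tasks \
#     | replication_running cars || start_replication cars
```

The grep-based match assumes the target appears in the task list exactly as the database name; check the actual /_active_tasks output on your server before relying on it.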

Before proceeding, create a server admin user on both the couchdb_master and couchdb_reads servers

On couchdb master

$ vagrant ssh couchdb_master

$ sudo chown -R couchdb:couchdb /usr/local/{lib,etc}/couchdb /usr/local/var/{lib,log,run}/couchdb

$ curl -X PUT localhost:5984/_config/admins/adminuser -d '"mypass"'

On couchdb reads

$ vagrant ssh couchdb_reads

$ sudo chown -R couchdb:couchdb /usr/local/{lib,etc}/couchdb /usr/local/var/{lib,log,run}/couchdb

$ curl -X PUT localhost:5984/_config/admins/adminuser -d '"mypass"'

SSH into the couchdb_reads VM; note that the following will be done on the CouchDB reads server

$ vagrant ssh couchdb_reads

Install Node.js

$ sudo yum install nodejs npm

Install couch-replicator-api (npm package)

$ sudo npm install couch-replicator-api -g

Create a JavaScript file called book_replication.js, which will check the replication and fix it automatically if the replication is not running.

$ sudo mkdir /usr/local/src/couchdb-replication

$ sudo chown -R couchdb:couchdb /usr/local/src/couchdb-replication

$ sudo vim /usr/local/src/couchdb-replication/book_replication.js

Content of book_replication.js

const CouchdbReplicator = require('couch-replicator-api');

// Replicator client for the local "books" database on the reads server

const replicator_book = new CouchdbReplicator('http://localhost:5984', 'adminuser', 'mypass', 'books');

// Replication document: continuously pull "books" from the master (192.168.50.200)

const book_replication_doc = {

  "source": "http://adminuser:mypass@192.168.50.200:5984/books",

  "target": "books",

  "continuous": true

};

// If the status check reports an error, (re)create the replication

function dataCallback(err, data) {

  if (err) {

    replicator_book.put(book_replication_doc, putCallback);

  }

}

function putCallback(err, data) {

  if (err) {

    console.log('When an error happens in this step, human intervention is required; you may set up a Nagios server or AWS CloudWatch to check on this replication');

  }

  else {

    console.log('The replication has been triggered');

  }

}

replicator_book.status(dataCallback);

Create a JavaScript file called car_replication.js, which will check the replication and fix it automatically if the replication is not running.

$ sudo vim /usr/local/src/couchdb-replication/car_replication.js

Content of car_replication.js

const CouchdbReplicator = require('couch-replicator-api');

// Replicator client for the local "cars" database on the reads server

const replicator_car = new CouchdbReplicator('http://localhost:5984', 'adminuser', 'mypass', 'cars');

// Replication document: continuously pull "cars" from the master (192.168.50.200)

const car_replication_doc = {

  "source": "http://adminuser:mypass@192.168.50.200:5984/cars",

  "target": "cars",

  "continuous": true

};

// If the status check reports an error, (re)create the replication

function dataCallback(err, data) {

  if (err) {

    replicator_car.put(car_replication_doc, putCallback);

  }

}

function putCallback(err, data) {

  if (err) {

    console.log('When an error happens in this step, human intervention is required; you may set up a Nagios server or AWS CloudWatch to check on this replication');

  }

  else {

    console.log('The replication has been triggered');

  }

}

replicator_car.status(dataCallback);

I set this up in conjunction with AWS CloudWatch, so I got notified whenever the functions above could not fix the replication automatically. These scripts will run as a cron job, for example every 5 minutes.

Create the cron job for the books replication

$ sudo vim /etc/cron.d/book_replication

MAILTO=your_email_address

HOME=/root/

*/5 * * * * root /usr/bin/node /usr/local/src/couchdb-replication/book_replication.js

Create the cron job for the cars replication

$ sudo vim /etc/cron.d/car_replication

MAILTO=your_email_address

HOME=/root/

*/5 * * * * root /usr/bin/node /usr/local/src/couchdb-replication/car_replication.js

I hope this is helpful.